Curt-Park/rainbow-is-all-you-need

Rainbow is all you need! A step-by-step tutorial from DQN to Rainbow

| field | value |
| --- | --- |
| repo name | Curt-Park/rainbow-is-all-you-need |
| repo link | https://github.com/Curt-Park/rainbow-is-all-you-need |
| homepage | |
| language | Jupyter Notebook |
| size (curr.) | 4856 kB |
| stars (curr.) | 664 |
| created | 2019-06-10 |
| license | MIT License |

Do you want an RL agent that moves nicely on Atari?

Rainbow is all you need!

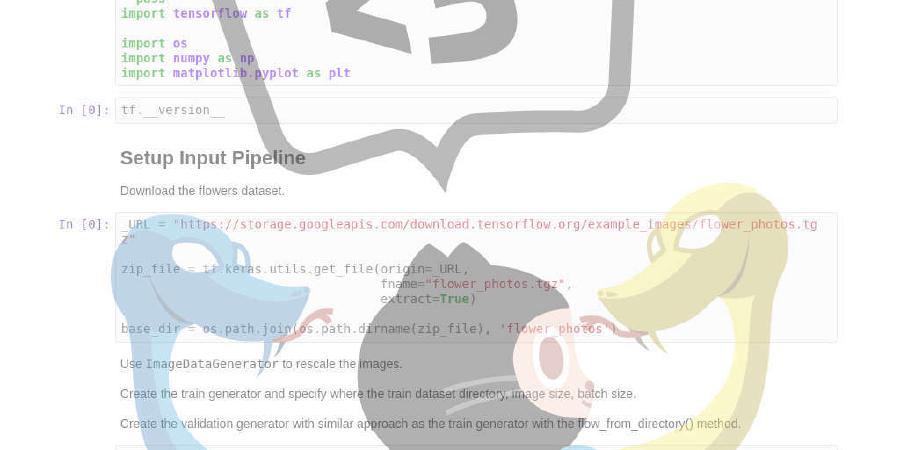

This is a step-by-step tutorial from DQN to Rainbow. Every chapter contains both theoretical background and an object-oriented implementation. Just pick any topic you are interested in, and learn! You can run the notebooks right away with Colab, even on your smartphone.

Please feel free to open an issue or a pull request if you have any ideas for making it better. :)

If you want a tutorial for policy gradient methods, please see PG is All You Need.

Contents

- DQN [NBViewer] [Colab]

- DoubleDQN [NBViewer] [Colab]

- PrioritizedExperienceReplay [NBViewer] [Colab]

- DuelingNet [NBViewer] [Colab]

- NoisyNet [NBViewer] [Colab]

- CategoricalDQN [NBViewer] [Colab]

- N-stepLearning [NBViewer] [Colab]

- Rainbow [NBViewer] [Colab]
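To give a feel for how some of the chapters above differ, here is a minimal sketch in plain Python of three target computations: the one-step DQN target, the Double DQN target, and an n-step return. This is not the code from the notebooks; the function names, argument names, and the list-based Q-values are assumptions made purely for illustration.

```python
# Illustrative sketch only. All names here are hypothetical and are not
# taken from the notebooks in this repository.

def dqn_target(reward, next_q_target, gamma=0.99, done=False):
    """One-step DQN target: r + gamma * max_a Q_target(s', a)."""
    if done:
        return reward
    return reward + gamma * max(next_q_target)


def double_dqn_target(reward, next_q_online, next_q_target, gamma=0.99, done=False):
    """Double DQN target: the online network selects the next action,
    and the target network evaluates that action."""
    if done:
        return reward
    best_action = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    return reward + gamma * next_q_target[best_action]


def n_step_return(rewards, bootstrap_value, gamma=0.99):
    """n-step return: discounted sum of the n rewards plus the
    discounted bootstrap value of the state reached after n steps."""
    g = bootstrap_value
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Q-values for the next state, as estimated by each network (made-up numbers).
next_q_online = [1.0, 2.0, 0.5]
next_q_target = [3.0, 1.5, 0.2]

print(dqn_target(1.0, next_q_target, gamma=0.5))
print(double_dqn_target(1.0, next_q_online, next_q_target, gamma=0.5))
print(n_step_return([1.0, 1.0], 4.0, gamma=0.5))
```

In this made-up example the Double DQN target is lower than the DQN target because the action is chosen by the online estimates rather than by taking the maximum over the target estimates, which is the overestimation effect that Double DQN is meant to reduce.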

Prerequisites

This repository is tested in an Anaconda virtual environment with Python 3.6.1+.

$ conda create -n rainbow_is_all_you_need python=3.6.1

$ conda activate rainbow_is_all_you_need

Installation

First, clone the repository.

git clone https://github.com/Curt-Park/rainbow-is-all-you-need.git

cd rainbow-is-all-you-need

Second, install the packages required to run the code. Just type:

make dep

Related Papers

- V. Mnih et al., “Human-level control through deep reinforcement learning.” Nature, 518 (7540):529–533, 2015.

- H. van Hasselt et al., “Deep Reinforcement Learning with Double Q-learning.” arXiv preprint arXiv:1509.06461, 2015.

- T. Schaul et al., “Prioritized Experience Replay.” arXiv preprint arXiv:1511.05952, 2015.

- Z. Wang et al., “Dueling Network Architectures for Deep Reinforcement Learning.” arXiv preprint arXiv:1511.06581, 2015.

- M. Fortunato et al., “Noisy Networks for Exploration.” arXiv preprint arXiv:1706.10295, 2017.

- M. G. Bellemare et al., “A Distributional Perspective on Reinforcement Learning.” arXiv preprint arXiv:1707.06887, 2017.

- R. S. Sutton, “Learning to predict by the methods of temporal differences.” Machine Learning, 3(1):9–44, 1988.

- M. Hessel et al., “Rainbow: Combining Improvements in Deep Reinforcement Learning.” arXiv preprint arXiv:1710.02298, 2017.

Contributors

Thanks goes to these wonderful people (emoji key):

This project follows the all-contributors specification. Contributions of any kind welcome!