dipanjanS/adversarial-learning-robustness

Contains materials for workshops pertaining to adversarial robustness in deep learning.

| field | value |
| --- | --- |
| repo name | dipanjanS/adversarial-learning-robustness |
| repo link | https://github.com/dipanjanS/adversarial-learning-robustness |
| homepage | |
| language | Jupyter Notebook |
| size (curr.) | 70351 kB |
| stars (curr.) | 44 |
| created | 2020-10-19 |
| license | GNU General Public License v3.0 |

Adversarial Robustness in Deep Learning


Outline

The following topics are covered:

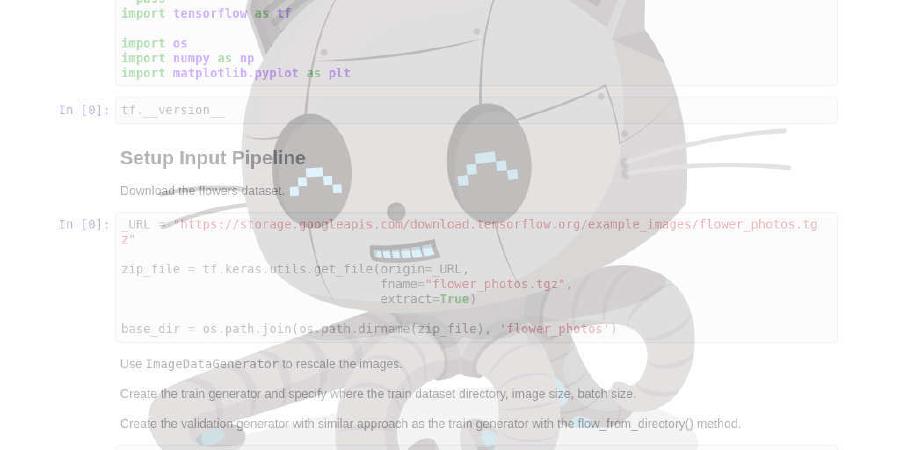

- Deep learning essentials

- Introduction to adversarial perturbations

  - Natural [8]

  - Synthetic [1, 2]

    - Simple Projected Gradient Descent-based attacks

    - Targeted Projected Gradient Descent-based attacks

    - Fast Gradient Sign Method (FGSM) attacks

- Optimizer susceptibility to different attacks

- Adversarial learning

  - Training on a dataset perturbed with FGSM

  - Training with Neural Structured Learning [3]

- Improving adversarial performance with EfficientNet [4] and its variants like Noisy Student Training [5] and AdvProp [6]
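The gradient-based attacks named in the outline can be illustrated without any deep learning framework. The following is a minimal sketch, not code from the notebooks: it assumes a hypothetical two-feature logistic-regression model, for which the gradient of the cross-entropy loss with respect to the input has the closed form `(p - y) * w`. The notebooks work with TensorFlow models and automatic differentiation instead. All names here (`fgsm`, `pgd`, `w`, `b`, `eps`, `alpha`) are illustrative.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability of class 1 under a toy logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def loss_grad_wrt_input(w, b, x, y):
    """Gradient of binary cross-entropy w.r.t. the input x: (p - y) * w."""
    p = predict(w, b, x)
    return [(p - y) * wi for wi in w]


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """FGSM: one step of size eps along the sign of the loss gradient."""
    g = loss_grad_wrt_input(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, g)]


def pgd(w, b, x, y, eps, alpha, steps, targeted=False):
    """PGD: repeated signed-gradient steps of size alpha, each followed by
    a projection back into the L-infinity ball of radius eps around x.

    Untargeted: y is the true label and the loss is increased.
    Targeted: y is the label the attacker wants and the loss is decreased.
    """
    direction = -1.0 if targeted else 1.0
    x_adv = list(x)
    for _ in range(steps):
        g = loss_grad_wrt_input(w, b, x_adv, y)
        x_adv = [xa + direction * alpha * sign(gi) for xa, gi in zip(x_adv, g)]
        # Projection: clip each coordinate to [x_i - eps, x_i + eps].
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, x)]
    return x_adv


# Hypothetical model weights and an input with true label 1.
w, b = [2.0, -1.0], 0.0
x, y = [0.5, 0.2], 1

x_fgsm = fgsm(w, b, x, y, eps=0.5)
x_pgd = pgd(w, b, x, y, eps=0.5, alpha=0.1, steps=10)
x_tgt = pgd(w, b, x, 0, eps=0.5, alpha=0.1, steps=10, targeted=True)

print("clean   :", predict(w, b, x))
print("FGSM    :", predict(w, b, x_fgsm))
print("PGD     :", predict(w, b, x_pgd))
print("targeted:", predict(w, b, x_tgt))
```

In this toy setting the clean input is classified as class 1 and each perturbed input as class 0, while every coordinate stays within `eps` of the original. On image data the perturbed input would typically also be clipped to the valid pixel range, which this sketch omits.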

Note that this repository is still in its nascent stage. Over time we will be adding more materials on improving performance with Smooth Adversarial Training [7], text-based attacks, and some notes on the interpretability aspects of adversarial robustness. Also, the materials presented here are solely meant for educational purposes and aren’t meant to be used otherwise.

We provide Jupyter Notebooks to demonstrate the topics mentioned above. These notebooks are fully runnable on Google Colab without any non-trivial configuration.

How to run the notebooks?

The notebooks are fully runnable on Google Colab. Here are the steps:

- First, install the Open in Colab extension for Google Chrome.

- Follow this screencast that shows how to navigate to a particular notebook inside this repository and open it in Google Colab.

Major libraries used

- TensorFlow (2.3)

- Neural Structured Learning

References

1. I. Goodfellow, J. Shlens, C. Szegedy, “Explaining and Harnessing Adversarial Examples,” ICLR 2015.

2. T. Miyato, S. Maeda, M. Koyama and S. Ishii, “Virtual Adversarial Training: A Regularization Method for Supervised and Semi-Supervised Learning,” IEEE Transactions on Pattern Analysis and Machine Intelligence 2019.

3. “Neural Structured Learning.” TensorFlow, https://www.tensorflow.org/neural_structured_learning.

4. Tan, Mingxing, and Quoc V. Le. “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks.” ArXiv:1905.11946 [Cs, Stat], Sept. 2020. arXiv.org, http://arxiv.org/abs/1905.11946.

5. Xie, Qizhe, et al. “Self-Training with Noisy Student Improves ImageNet Classification.” ArXiv:1911.04252 [Cs, Stat], June 2020. arXiv.org, http://arxiv.org/abs/1911.04252.

6. Xie, Cihang, et al. “Adversarial Examples Improve Image Recognition.” ArXiv:1911.09665 [Cs], Apr. 2020. arXiv.org, http://arxiv.org/abs/1911.09665.

7. Xie, Cihang, et al. “Smooth Adversarial Training.” ArXiv:2006.14536 [Cs], June 2020. arXiv.org, http://arxiv.org/abs/2006.14536.

8. Hendrycks, Dan, et al. “Natural Adversarial Examples.” ArXiv:1907.07174 [Cs, Stat], Jan. 2020. arXiv.org, http://arxiv.org/abs/1907.07174.