eragonruan/text-detection-ctpn

text detection mainly based on ctpn model in tensorflow, id card detect, connectionist text proposal network

| field | value |
| --- | --- |
| repo name | eragonruan/text-detection-ctpn |
| repo link | https://github.com/eragonruan/text-detection-ctpn |
| homepage | |
| language | Python |
| size (curr.) | 352487 kB |
| stars (curr.) | 2806 |
| created | 2017-09-20 |
| license | MIT License |

text-detection-ctpn

Scene text detection based on CTPN (Connectionist Text Proposal Network), implemented in TensorFlow. The original paper can be found here, and the original Caffe repo can be found here. For more detail about the paper and code, see this blog. If you have any questions, check the existing issues first; if the problem persists, open a new issue.

NOTICE: Thanks to banjin-xjy, banjin and I have reconstructed this repo. The old repo was based on Faster-RCNN and retained a lot of unused code and dependencies, which made it hard to understand and maintain, so we reconstructed it. The old code is saved in branch master.

roadmap

- reconstruct the repo

- cython nms and bbox utils

- loss function as referred in paper

- oriented text connector

- BLSTM

setup

The nms and bbox utils are written in Cython, so you have to build the library first.

cd utils/bbox

chmod +x make.sh

./make.sh

This generates nms.so and bbox.so in the current folder.
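For readers unfamiliar with what an nms utility does, the following is a minimal pure-Python sketch of greedy non-maximum suppression. It is not the repository's Cython code: the function names, the `(x1, y1, x2, y2)` box layout, and the default threshold are assumptions chosen for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Return indices of the boxes kept by greedy NMS, highest score first.

    iou_threshold is an illustrative default, not a value taken from the repo.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```

The second box overlaps the first with an IoU of about 0.68, so it is suppressed; the third box does not overlap either and is kept. A compiled version does the same work much faster on thousands of proposals, which is presumably why the repo ships it in Cython.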

demo

- follow setup to build the library

- download the ckpt file from google drive or baidu yun

- put checkpoints_mlt/ in text-detection-ctpn/

- put your images in data/demo and run the demo from the repo root; the results will be saved in data/res

python ./main/demo.py
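If the demo fails at startup, a quick layout check can help narrow down which step was missed. The sketch below is a hypothetical helper, not part of the repository; the paths come from the setup and demo steps above, and the exact `.so` file names may differ on your platform.

```python
from pathlib import Path

# Paths named in the setup and demo steps; adjust if your build produces
# differently named extension files (an assumption worth checking).
REQUIRED = [
    "utils/bbox/nms.so",
    "utils/bbox/bbox.so",
    "checkpoints_mlt",
    "data/demo",
]


def missing_paths(root, required=REQUIRED):
    """Return the required paths that do not exist under the repo root."""
    root = Path(root)
    return [p for p in required if not (root / p).exists()]


if __name__ == "__main__":
    missing = missing_paths(".")
    if missing:
        print("Missing before running the demo:", ", ".join(missing))
    else:
        print("Layout looks complete; try: python ./main/demo.py")
```

Run it from the repo root so that the relative paths resolve the same way they do for `main/demo.py`.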

training

prepare data

- First, download the pre-trained VGG net model and put it in data/vgg_16.ckpt. You can download it from tensorflow/models.

- Second, download the dataset we prepared from google drive or baidu yun. Put the downloaded data in data/dataset/mlt, then start the training.

- Alternatively, you can prepare your own dataset with the following steps.

- Modify DATA_FOLDER and OUTPUT in utils/prepare/split_label.py according to your dataset, then run split_label.py from the repo root

python ./utils/prepare/split_label.py

- it will generate the prepared data in data/dataset/

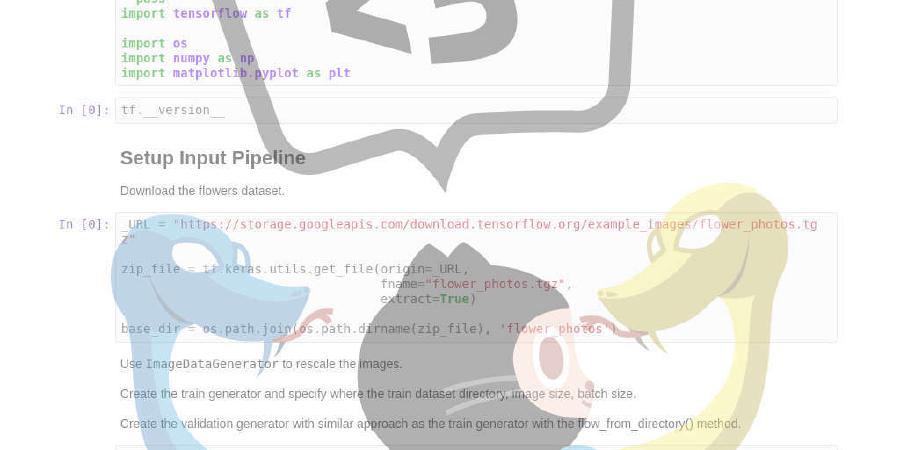

- The input file format demo of split_label.py can be found in gt_img_859.txt. And the output file of split_label.py is img_859.txt. A demo image of the prepared data is shown below.
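To give a feel for what label splitting means in CTPN, the sketch below cuts an axis-aligned text box into fixed-width vertical strips. CTPN predicts text as a sequence of 16-pixel-wide proposals, so ground-truth boxes have to be expressed the same way. This is a simplified illustration under stated assumptions: the function name, the rectangular input, and the exclusive right edge are hypothetical, and it does not reproduce the actual logic or file format of split_label.py.

```python
STRIDE = 16  # fixed proposal width used by CTPN


def split_box(x_min, y_min, x_max, y_max, stride=STRIDE):
    """Cut an axis-aligned box into stride-wide strips on a stride grid.

    Coordinates are integers; x_max is treated as exclusive in this sketch.
    """
    strips = []
    # Start at the grid column that contains x_min.
    x = (x_min // stride) * stride
    while x < x_max:
        strips.append((x, y_min, x + stride - 1, y_max))
        x += stride
    return strips


for strip in split_box(20, 5, 50, 25):
    print(strip)
# (16, 5, 31, 25)
# (32, 5, 47, 25)
# (48, 5, 63, 25)
```

Each strip keeps the vertical extent of the original box and snaps horizontally to the 16-pixel grid, which is the general shape of training target a fixed-width proposal network expects.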

train

Simply run

python ./main/train.py

- The model provided in checkpoints_mlt was trained on a GTX1070 for 50k iterations. Each iteration takes about 0.25s, so 50k iterations take about 3.5 hours.
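The training-time figure follows directly from the per-iteration cost. The short sketch below reproduces the arithmetic so you can plug in the timing you observe on your own GPU; the function name is hypothetical.

```python
def training_hours(iterations, seconds_per_iter):
    """Estimate wall-clock training time in hours."""
    return iterations * seconds_per_iter / 3600.0


# Figures quoted above: 50k iterations at about 0.25 s each.
hours = training_hours(50_000, 0.25)
print(f"{hours:.2f} hours")  # 3.47 hours
```

The estimate covers the training iterations only and ignores checkpoint saving and data loading stalls, so treat it as a lower bound.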

some results

NOTICE: all the photos used below are collected from the internet. If it affects you, please contact me to delete them.

oriented text connector

- oriented text connector has been implemented; it's working, but still needs further improvement.

- left figure is the result for DETECT_MODE H, right figure for DETECT_MODE O