awslabs/aws-data-wrangler

Pandas on AWS

| repo name | awslabs/aws-data-wrangler |
|---|---|
| repo link | https://github.com/awslabs/aws-data-wrangler |
| homepage | https://aws-data-wrangler.readthedocs.io |
| language | Python |
| size (curr.) | 3524 kB |
| stars (curr.) | 617 |
| created | 2019-02-26 |
| license | Apache License 2.0 |

AWS Data Wrangler


NOTE

We just released a new major version, 1.0, with breaking changes. Please make sure that all your old projects have their dependencies frozen on the desired version (e.g. pip install awswrangler==0.3.2).
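As a complement to freezing the dependency, a project can check at startup whether the installed release crosses a major-version boundary. This is a minimal sketch, assuming plain X.Y.Z version strings; the helpers `major_version`, `crosses_major_version` and `check_awswrangler` are hypothetical and not part of awswrangler. The installed version is read with the standard library's `importlib.metadata` (Python 3.8+).

```python
from importlib.metadata import PackageNotFoundError, version


def major_version(ver: str) -> int:
    """Return the leading (major) component of a version string such as '1.0.0'.

    Hypothetical helper; assumes a plain 'X.Y.Z'-style string.
    """
    return int(ver.split(".")[0])


def crosses_major_version(installed: str, pinned: str) -> bool:
    """True when the installed release has a different major version than the pin."""
    return major_version(installed) != major_version(pinned)


def check_awswrangler(pinned: str = "0.3.2") -> None:
    """Hypothetical startup check: warn if awswrangler's major version differs from the pin."""
    try:
        installed = version("awswrangler")
    except PackageNotFoundError:
        print("awswrangler is not installed")
        return
    if crosses_major_version(installed, pinned):
        print(f"awswrangler {installed} is installed, but this project targets {pinned}: expect breaking changes")
    else:
        print(f"awswrangler {installed} has the same major version as {pinned}")
```

This only compares the major component, so it will not flag breaking changes between 0.x minor releases; pinning the exact version as shown above remains the safer option.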

| Source | Downloads | Page | Installation Command |
|---|---|---|---|
| PyPI | | Link | pip install awswrangler |
| Conda | | Link | conda install -c conda-forge awswrangler |

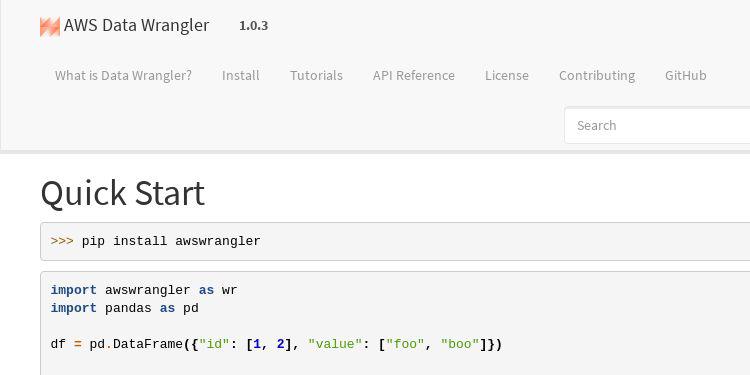

Quick Start

Install the Wrangler with: pip install awswrangler

```python
import awswrangler as wr
import pandas as pd

df = pd.DataFrame({"id": [1, 2], "value": ["foo", "boo"]})

# Storing data on Data Lake
wr.s3.to_parquet(
    df=df,
    path="s3://bucket/dataset/",
    dataset=True,
    database="my_db",
    table="my_table"
)

# Retrieving the data directly from Amazon S3
df = wr.s3.read_parquet("s3://bucket/dataset/", dataset=True)

# Retrieving the data from Amazon Athena
df = wr.athena.read_sql_query("SELECT * FROM my_table", database="my_db")

# Getting Redshift connection (SQLAlchemy) from Glue Catalog Connections
engine = wr.catalog.get_engine("my-redshift-connection")

# Retrieving the data from Amazon Redshift Spectrum
df = wr.db.read_sql_query("SELECT * FROM external_schema.my_table", con=engine)
```
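The path arguments in the Quick Start are S3 URIs of the form s3://<bucket>/<prefix>; with dataset=True the path names a prefix (a "folder") holding the dataset's files rather than a single object. The sketch below only illustrates how such a URI breaks down into bucket and key prefix. `split_s3_path` is a hypothetical helper, not part of the awswrangler API, which handles these paths for you.

```python
from typing import Tuple


def split_s3_path(path: str) -> Tuple[str, str]:
    """Split 's3://bucket/some/prefix/' into ('bucket', 'some/prefix/').

    Hypothetical helper for illustration only; awswrangler parses paths internally.
    """
    scheme = "s3://"
    if not path.startswith(scheme):
        raise ValueError(f"not an S3 path: {path!r}")
    # Everything before the first '/' after the scheme is the bucket name.
    bucket, _, key = path[len(scheme):].partition("/")
    if not bucket:
        raise ValueError(f"missing bucket name: {path!r}")
    return bucket, key
```

For the Quick Start dataset, split_s3_path("s3://bucket/dataset/") yields the bucket "bucket" and the key prefix "dataset/".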

Read The Docs

- What is AWS Data Wrangler?
- Install
- Tutorials
  - 01 - Introduction
  - 02 - Sessions
  - 03 - Amazon S3
  - 04 - Parquet Datasets
  - 05 - Glue Catalog
  - 06 - Amazon Athena
  - 07 - Databases (Redshift, MySQL and PostgreSQL)
  - 08 - Redshift - Copy & Unload
  - 09 - Redshift - Append, Overwrite and Upsert
  - 10 - Parquet Crawler
  - 11 - CSV Datasets
  - 12 - CSV Crawler
  - 13 - Merging Datasets on S3
- API Reference
- License
- Contributing